Problem

As a live audio engineer, I kept running into the same problem while setting up for an event: verifying audio inputs and outputs between my gear and the pre-installed gear as quickly as possible.

Solution

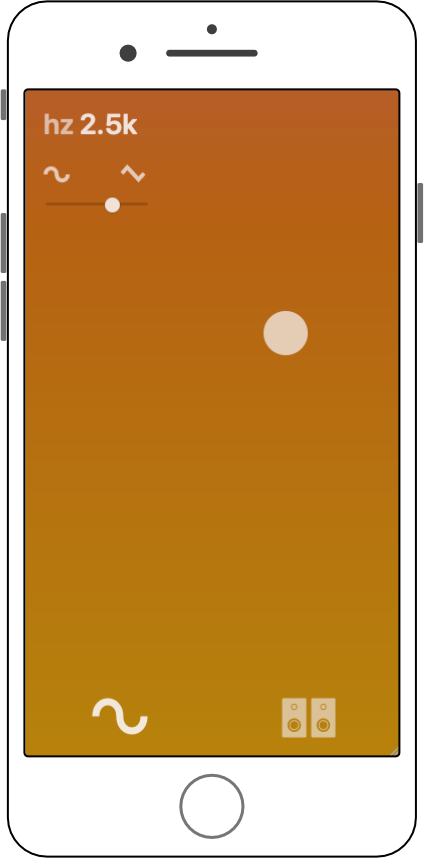

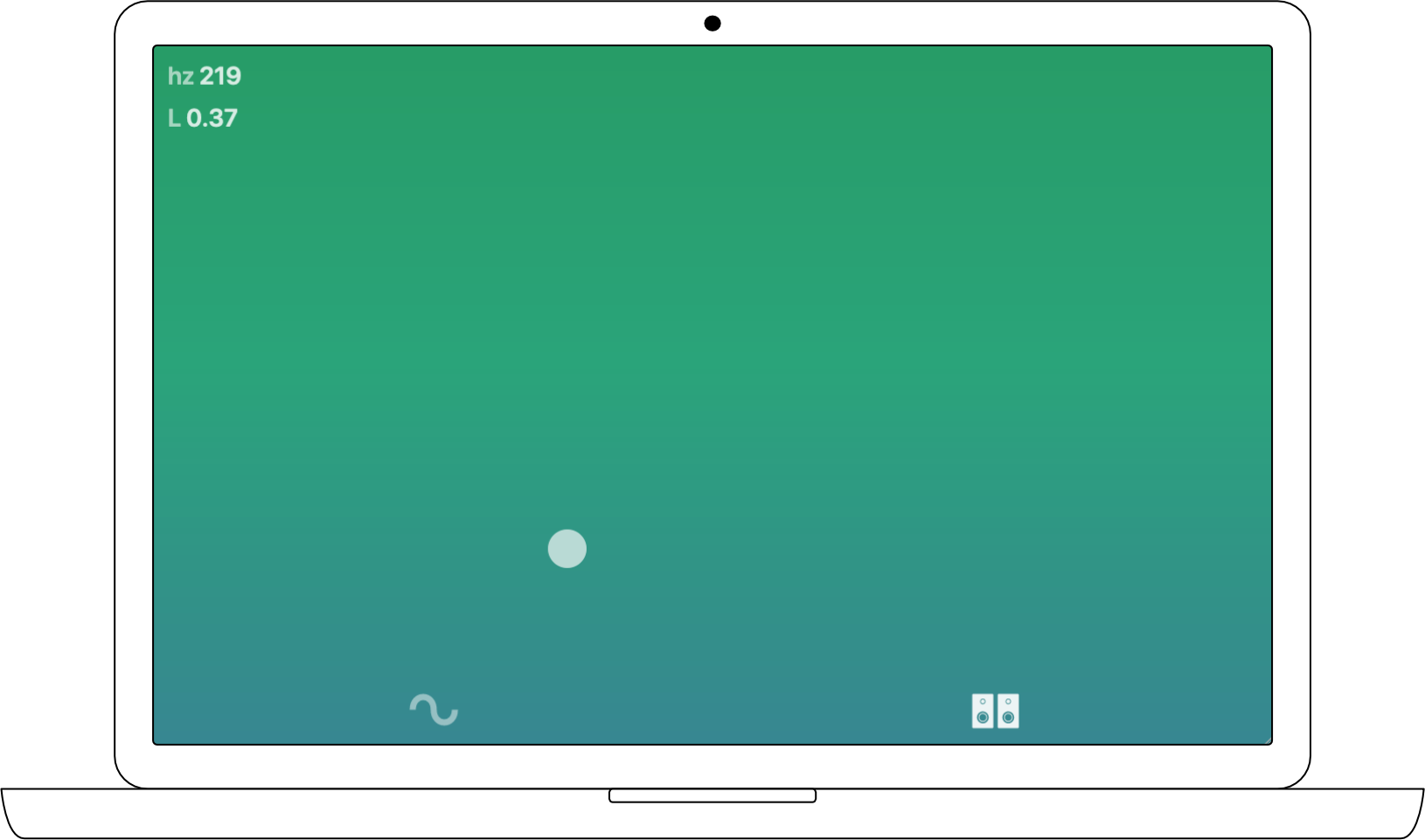

What started out as a simple university project became a very useful tool for me at work. Soundcheck provides a touch interface that synthesizes a tone based on the XY location of the touch in the viewport. The X axis currently controls two audio synthesis variables: stereo and waveshape. Touch input is scaled on a logarithmic curve, which makes the frequency bands most commonly used in sound-checking easier to reach.
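The logarithmic scaling can be illustrated with a minimal sketch. This is not Soundcheck's actual code: the function name `positionToFrequency`, the 20 Hz to 20 kHz range, and the idea that the input is a touch position already normalized to 0..1 are all assumptions made for illustration.

```typescript
// Hypothetical helper: maps a normalized touch position (0..1) to a frequency
// in Hz so that equal distances on screen correspond to equal musical
// intervals. The 20 Hz - 20 kHz defaults are assumed, not taken from the app.
function clampUnit(value: number): number {
  return Math.min(1, Math.max(0, value));
}

function positionToFrequency(
  position: number,
  minHz: number = 20,
  maxHz: number = 20000
): number {
  const t = clampUnit(position);
  // Exponential interpolation: each equal step in t multiplies the frequency
  // by the same ratio, which is what a logarithmic frequency axis looks like.
  return minHz * Math.pow(maxHz / minHz, t);
}

// A linear mapping, for comparison, spends half the axis above ~10 kHz.
function positionToFrequencyLinear(
  position: number,
  minHz: number = 20,
  maxHz: number = 20000
): number {
  return minHz + (maxHz - minHz) * clampUnit(position);
}
```

With these assumed defaults, the midpoint of the axis lands near 632 Hz rather than near 10 kHz, so the low and mid bands where most sound-check listening happens get far more screen space.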

Haptic Animation

For this project I figured out how to animate color gradients as a function of mouse and touch input, create micro-interaction animations on mouse and touch events, and convert touch coordinates into audio data inputs.
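As a rough sketch of the coordinate handling described above, the snippet below normalizes a pointer position against the viewport size and derives a CSS gradient string from it. The names `normalizePoint` and `gradientForPoint`, the hue and lightness mapping, and the choice of a radial gradient are hypothetical; the real project may do this quite differently.

```typescript
// Hypothetical types and helpers for illustration only.
interface NormalizedPoint {
  x: number; // 0 at the left edge, 1 at the right edge
  y: number; // 0 at the top edge, 1 at the bottom edge
}

function clamp01(value: number): number {
  return Math.min(1, Math.max(0, value));
}

// Converts pixel coordinates into the 0..1 range. The same normalized values
// could also be passed on as audio parameters.
function normalizePoint(
  clientX: number,
  clientY: number,
  width: number,
  height: number
): NormalizedPoint {
  return { x: clamp01(clientX / width), y: clamp01(clientY / height) };
}

// Builds a CSS radial gradient centered on the touch point. The mapping of
// x to hue and y to lightness is an assumed design, chosen for simplicity.
function gradientForPoint(p: NormalizedPoint): string {
  const hue = Math.round(p.x * 360);
  const lightness = Math.round(30 + p.y * 40);
  const cx = (p.x * 100).toFixed(1);
  const cy = (p.y * 100).toFixed(1);
  return (
    `radial-gradient(circle at ${cx}% ${cy}%, ` +
    `hsl(${hue}, 80%, ${lightness}%), hsl(${(hue + 60) % 360}, 80%, 20%))`
  );
}

// In a browser, a pointermove listener (which covers mouse and touch) could
// apply the result, for example:
//   el.style.background = gradientForPoint(
//     normalizePoint(e.clientX, e.clientY, window.innerWidth, window.innerHeight)
//   );
```

Keeping the normalization separate from the rendering means the visual feedback and the audio parameters can be driven from the same pair of numbers.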

Tone.js

I had used Tone.js before, for an audio-centric web app I completed while on internship at MGH Baltimore, because of the simplicity it provides as a wrapper for the Web Audio API. The concept of serially chaining envelopes, synthesis, and modulation effects comes somewhat naturally to me after many years of making music in a Digital Audio Workstation.